COVID-19 Genomic Navigator

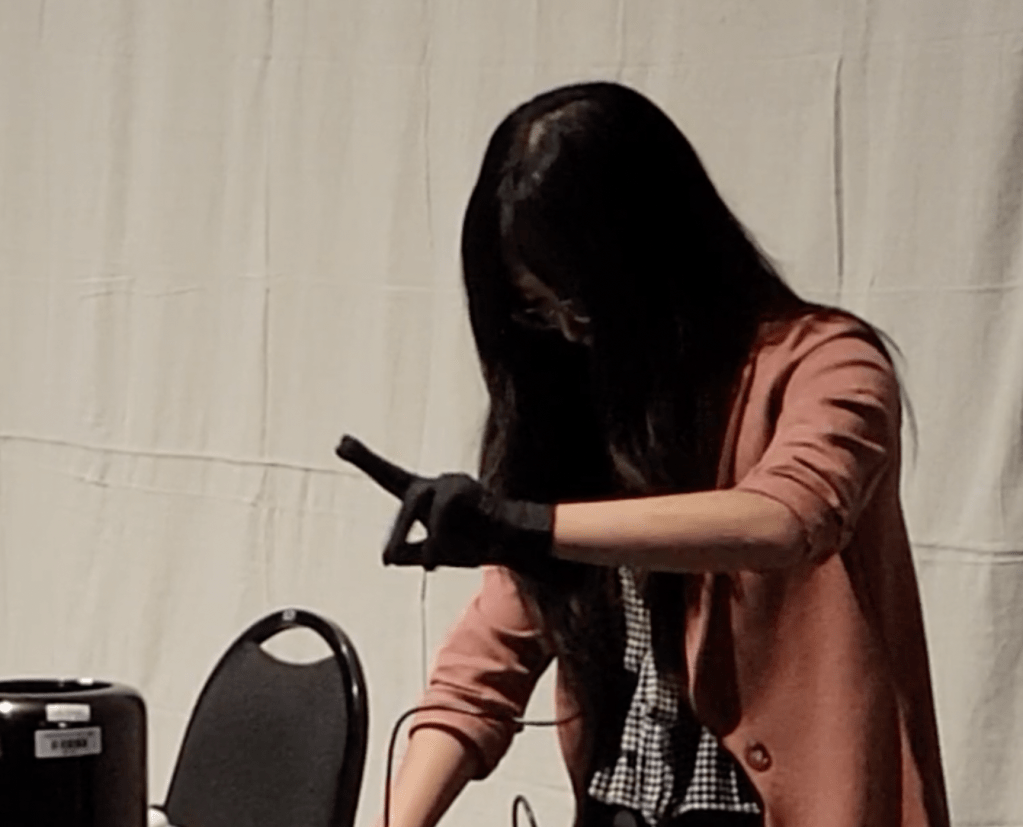

COVID-19 Genomic Navigator is a motion sensing glove that sonifies the protein genomic data of COVID-19 extracted from the National Center for Biotechnology Information (NCBI) and the Protein Data Bank (PDB). The PDB files describe the structure of the proteins at the atomic and amino acid levels, which provides the multiple dimensions used in parameter mapping for the synthesized sounds. Three data sets were selected for the project: 7B3C, 7D4F, and 7KRP, all related to RNA-dependent RNA polymerase (RdRp). The data were converted to .prn or .csv files and read into Max [3] patches, which send OSC messages to Kyma (Symbolic Sound) [4], where multiple synthesizers perform the audio signal processing through parameter mapping.

The three protein data sets are distributed evenly in physical space, and the motion sensing glove navigates and sonifies them synchronously in both space and time. As shown in Figure 1, the glove captures hand gestures and movement, and the Arduino sends this data to a Max patch as OSC messages. The Max patch drives a graphical user interface (GUI) that controls the parameters of the synthesizers in Kyma, also through OSC messages. The project also incorporates machine learning to interpret the data from the glove.

Wekinator's gesture recognition, using dynamic time warping (DTW), is applied to the motion sensing glove data to perform machine learning and to catalyze the sonification of the interaction between the different protein data sets. The motion sensor data is sent from Max to Wekinator for analysis. Wekinator's gesture recognition output is sent back to Max, where it triggers programmed events that bring out the musical characteristics of the proteins through the synthesizer control parameters in Max. Both the protein genomic data and the gestural data are reformatted and routed into several synthesizers for audification and sonification. Before the performance, examples of five gesture categories are labelled, recorded, analyzed, and used to train the machine learning model. During real-time performance, some gestures are not assigned to any category by the model. These non-categorized gestures are used for musical expression alongside the five categorized gestures to build the growth and development of the piece.
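Wekinator provides its own DTW gesture recognizer, configured through its interface, so the following is not its implementation. It is an independent Python sketch of the general idea: an incoming multi-axis sensor sequence is compared with recorded examples of each gesture using DTW, the label of the closest example is returned, and anything farther than a match threshold is reported as uncategorized (`None`). This is one plausible way non-categorized gestures could arise. The gesture names, the two-axis frames, and the threshold value are hypothetical.

```python
"""Sketch: nearest-template gesture classification with dynamic time warping."""
from __future__ import annotations

import math
from typing import Optional, Sequence

Frame = Sequence[float]    # one sensor reading, e.g. (accel_x, accel_y, ...)
Gesture = Sequence[Frame]  # a time series of readings


def dtw_distance(a: Gesture, b: Gesture) -> float:
    """Classic O(len(a) * len(b)) DTW with Euclidean frame-to-frame cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = best cumulative cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # a advances, b frame repeats
                                 cost[i][j - 1],      # b advances, a frame repeats
                                 cost[i - 1][j - 1])  # both advance
    return cost[n][m]


def classify(sample: Gesture,
             templates: dict[str, list[Gesture]],
             threshold: float) -> Optional[str]:
    """Label of the nearest recorded example, or None if nothing is close enough."""
    best_label, best_dist = None, float("inf")
    for label, examples in templates.items():
        for example in examples:
            dist = dtw_distance(sample, example)
            if dist < best_dist:
                best_label, best_dist = label, dist
    return best_label if best_dist <= threshold else None
```

With templates recorded for each trained gesture, `classify(window, templates, threshold=2.0)` would return a label for a close match and `None` otherwise. Because the summed DTW cost grows with sequence length, the threshold would need tuning against the recorded data, or the distance could be normalized by path length.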